Many brands still struggle to measure the true impact of their advertising. Relying on flawed testing methodologies, incomplete data, or platform-reported metrics can lead to misleading conclusions and wasted budgets.

To navigate this landscape effectively, advertisers need gold-standard experimentation practices: rigorous, well-structured tests that generate accurate, actionable insights. Whether it’s testing new channels, optimizing budget allocation, or validating attribution models, a well-designed experiment grounds marketing decisions in incrementality, causality, and real business outcomes rather than vanity metrics.

In this blog, we’ll break down the best practices for setting up high-quality advertising experiments that drive smarter marketing investments and sustainable growth. Let’s dive in.

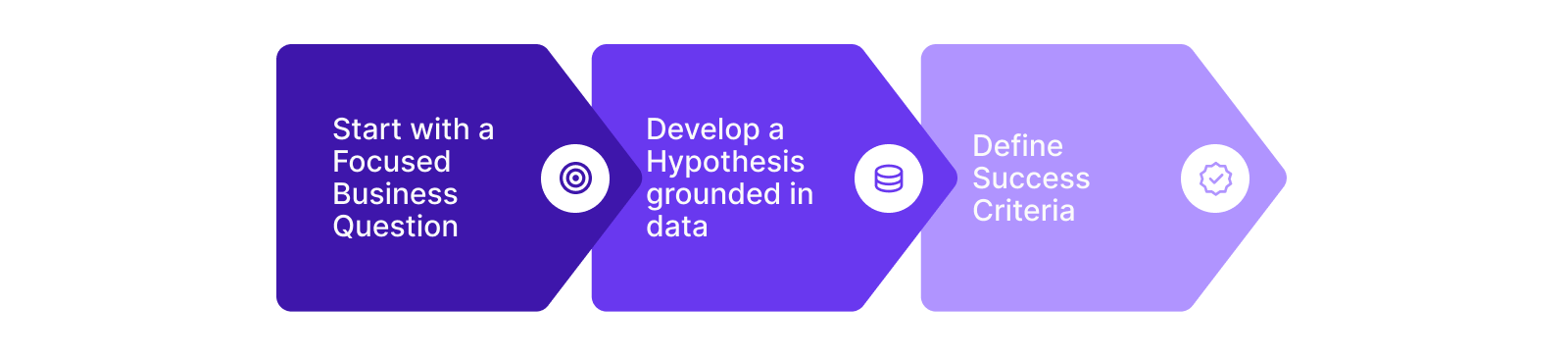

1. Define a Clear Business Question and Hypothesis

Running experiments without a well-defined objective is like navigating without a map: it leads to confusing results, wasted budgets, and inconclusive insights. Without a structured hypothesis, brands risk misinterpreting outcomes or making decisions based on flawed assumptions.

To ensure your experiment delivers actionable results:

- Start with a focused business question. Instead of vague goals, pinpoint what you need to learn. Example: “Does increasing TikTok ad spend drive incremental conversions, or does it cannibalize organic traffic?”

- Develop a hypothesis grounded in data. Use past performance trends, industry benchmarks, and marketing mix modeling (MMM) insights to set expectations. Example: “If we increase TikTok spend by 30%, we expect a 20% uplift in incremental purchases.”

- Define success criteria. Set measurable thresholds that determine whether to scale, pivot, or pause. Example: “If incremental ROAS improves by 20% or more, we will reallocate additional budget to this channel.”
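One way to keep the question, hypothesis, and success criteria honest is to write them down as a small decision rule before the test launches. The sketch below is illustrative only: the `ExperimentPlan` class, the `decide` function, and the mapping of outcomes to “scale”, “pivot”, or “pause” are hypothetical assumptions, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class ExperimentPlan:
    """Hypothetical record of what the test must answer, fixed before launch."""
    question: str
    hypothesis: str
    primary_kpi: str
    success_threshold: float  # minimum relative improvement needed to scale


def decide(plan: ExperimentPlan, baseline: float, observed: float) -> str:
    """Apply the pre-registered success criterion to the measured KPI.

    Assumed mapping: meets the threshold -> scale, positive but below the
    threshold -> pivot, no improvement -> pause.
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    relative_change = (observed - baseline) / baseline
    if relative_change >= plan.success_threshold:
        return "scale"
    if relative_change > 0:
        return "pivot"
    return "pause"


if __name__ == "__main__":
    plan = ExperimentPlan(
        question="Does increasing TikTok ad spend drive incremental conversions?",
        hypothesis="A 30% spend increase yields a 20% uplift in incremental purchases",
        primary_kpi="incremental ROAS",
        success_threshold=0.20,
    )
    # Hypothetical readout: incremental ROAS moved from 2.0 to 2.5 (+25%).
    print(decide(plan, baseline=2.0, observed=2.5))  # scale
```

Writing the threshold down before any data arrives makes it much harder to move the goalposts after seeing the results.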

A structured business question and testable hypothesis set the foundation for reliable, data-backed decisions, helping brands avoid guesswork and maximize marketing impact.

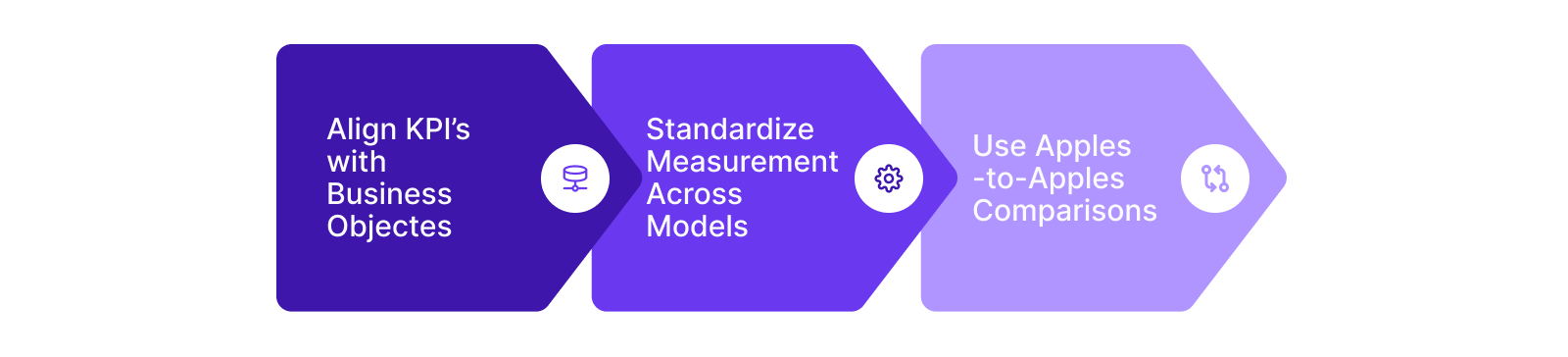

2. Prioritize KPI Parity for Accurate Measurement

Misaligned KPIs across measurement approaches such as attribution, MMM, and incrementality testing can lead to inconsistent insights, making it hard to trust experiment results. If each tool defines success differently, marketers risk basing budget decisions on incomplete or conflicting data.

To ensure accurate measurement and reliable comparisons:

- Align KPIs with business objectives. Ensure that the metrics tracked in experiments map directly to overarching goals like revenue growth, customer acquisition, or profitability.

- Standardize measurement across models. Attribution models may report on immediate conversions, while MMM looks at long-term trends. Use consistent conversion definitions and measurement windows so experiments can bridge these perspectives.

- Use apples-to-apples comparisons. If your experiment measures incremental ROAS, compare it against MMM-modeled ROAS, not platform-reported ROAS, which may contain inflated or over-attributed conversions.
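The gap between the two ROAS figures comes down to the numerator. Platform ROAS divides all attributed revenue by spend, while incremental ROAS (iROAS) divides only the revenue the experiment shows would not have happened without the ads. A minimal sketch, with hypothetical function names and numbers, assuming control-group revenue has already been scaled to the size of the treatment group:

```python
def platform_roas(attributed_revenue: float, spend: float) -> float:
    """ROAS as an ad platform typically reports it: all attributed revenue / spend."""
    return attributed_revenue / spend


def incremental_roas(treatment_revenue: float, scaled_control_revenue: float,
                     spend: float) -> float:
    """iROAS: revenue above the control baseline / spend.

    scaled_control_revenue is the control group's revenue adjusted to the
    treatment group's size, i.e. the estimated counterfactual.
    """
    incremental_revenue = treatment_revenue - scaled_control_revenue
    return incremental_revenue / spend


if __name__ == "__main__":
    spend = 10_000.0
    # Hypothetical figures for illustration only.
    print(platform_roas(attributed_revenue=40_000.0, spend=spend))  # 4.0
    print(incremental_roas(120_000.0, 105_000.0, spend))  # 1.5
```

In this made-up case the platform reports 4.0 while the experiment supports 1.5. Comparing the 1.5 against an MMM-modeled ROAS, rather than against the 4.0, is the apples-to-apples check.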

By ensuring KPI parity, marketers can confidently interpret experiment results, make more precise budget decisions, and optimize campaigns based on real impact rather than conflicting data points.

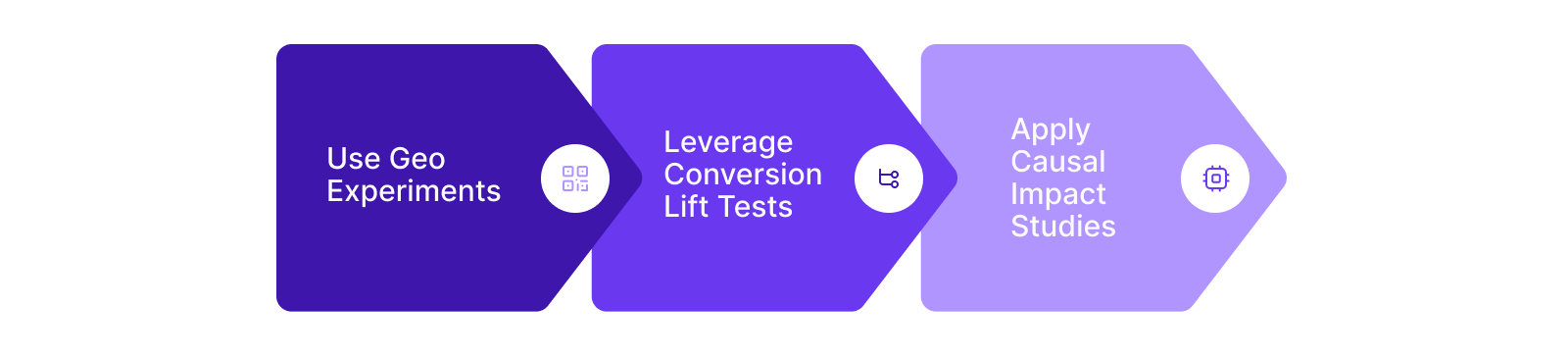

3. Choose the Right Experiment Design

Not all experiments are created equal. Many brands default to A/B testing, but this isn’t always the best approach, especially for large-scale campaigns or channels without deterministic attribution.

To ensure accurate and actionable results:

- Use Geo Experiments for regional impact analysis. By comparing regions with and without ad exposure, marketers can measure the incremental value of a specific marketing channel.

Example: “Does increasing TV ad spend in one city lead to higher online conversions compared to a control city?”

- Leverage Conversion Lift Tests for digital campaign evaluation. These tests compare an exposed group to a control group to isolate ad-driven conversions.

Example: “How many additional sales did our Meta campaign generate beyond organic traffic?”

- Apply Causal Impact Studies when A/B testing isn’t feasible. These studies use statistical modeling to estimate marketing impact, particularly for long sales cycles and offline conversions.

Example: “How much incremental revenue did our radio ads contribute over six months?”
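For geo experiments, a simple way to turn regional data into an incremental estimate is a difference-in-differences calculation: the change in test regions minus the change in control regions over the same period. This is a deliberately basic sketch with hypothetical data and a hypothetical function name. It assumes test and control regions would have moved in parallel without the campaign, and it is not the Bayesian structural time-series approach behind Google's CausalImpact package.

```python
from statistics import mean


def diff_in_diff(test_pre, test_post, control_pre, control_post):
    """Difference-in-differences estimate of the average per-period lift.

    Each argument is a list of per-period outcomes (e.g. weekly conversions).
    Assumes parallel trends: absent the campaign, the test regions would have
    changed by the same amount as the control regions.
    """
    test_change = mean(test_post) - mean(test_pre)
    control_change = mean(control_post) - mean(control_pre)
    return test_change - control_change


if __name__ == "__main__":
    # Hypothetical weekly conversions before and during a TV flight.
    lift = diff_in_diff(
        test_pre=[100, 102, 98],
        test_post=[120, 118, 122],
        control_pre=[80, 82, 78],
        control_post=[88, 90, 86],
    )
    print(lift)  # 12
```

A naive before-and-after comparison of the test regions alone would credit the campaign with 20 extra conversions per week; netting out the control regions' rise of 8 leaves 12.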

By choosing the right experiment design, brands ensure valid insights that translate into real performance improvements.

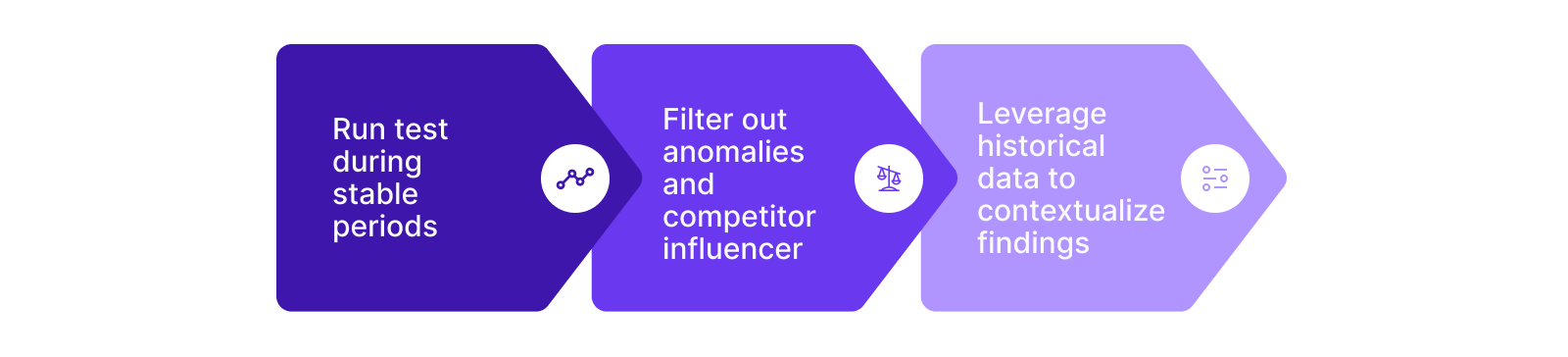

4. Control for External Variables

External factors like seasonality, competitor promotions, and market shifts can distort experimental results, leading to misleading conclusions. Without proper controls, marketers risk misattributing success or failure to the wrong variables.

To ensure your experiment delivers reliable and unbiased insights:

- Run tests during stable periods. Avoid running experiments during peak shopping seasons, major holidays, or other high-volatility periods that could artificially inflate or suppress results.

Example: “Measuring the impact of a new paid search strategy during Black Friday might not reflect its true performance in a normal period.”

- Filter out anomalies and competitor influences. Sudden viral trends or aggressive competitor discounts can skew outcomes, making it essential to adjust for these unexpected disruptions.

Example: “A competitor’s flash sale could temporarily impact conversion rates; well-matched control groups exposed to the same sale can help mitigate this effect.”

- Leverage historical data to contextualize results. Comparing experiment findings against long-term trends helps account for recurring market fluctuations and ensures insights are rooted in real, sustainable performance.

Example: “Sales typically drop in Q1 for this industry—did the experiment truly fail, or was it influenced by seasonal decline?”
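Matched control groups are the main defense against seasonality, but historical data can add context when reading results. One simple, widely used option is a ratio-to-average seasonal index. The sketch below uses hypothetical quarterly sales and hypothetical function names; a real program might prefer a proper time-series decomposition.

```python
from statistics import mean


def seasonal_index(history: dict[str, list[float]], period: str) -> float:
    """Average sales in `period` divided by the average across all periods."""
    all_values = [value for values in history.values() for value in values]
    return mean(history[period]) / mean(all_values)


def deseasonalize(observed: float, index: float) -> float:
    """Express an observed value in 'typical period' terms."""
    return observed / index


if __name__ == "__main__":
    # Hypothetical quarterly sales for the past two years.
    history = {
        "Q1": [80.0, 80.0],
        "Q2": [100.0, 100.0],
        "Q3": [110.0, 110.0],
        "Q4": [110.0, 110.0],
    }
    q1_index = seasonal_index(history, "Q1")  # 80 / 100 = 0.8
    # Q1 sales during the test came in at 88: below the yearly average,
    # but about 110 once the usual Q1 dip is removed.
    print(round(deseasonalize(88.0, q1_index), 1))
```

Read this way, a “weak” Q1 result can turn out to be above normal for the season, which is exactly the question the example above asks.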

By proactively accounting for external influences, brands can extract meaningful insights that lead to smarter, more confident marketing decisions.

5. Ensure a Proper Sample Size and Duration

Rushing an experiment or testing on an audience that’s too small can lead to misleading insights. Without sufficient data, marketers risk making decisions based on noise rather than true performance trends.

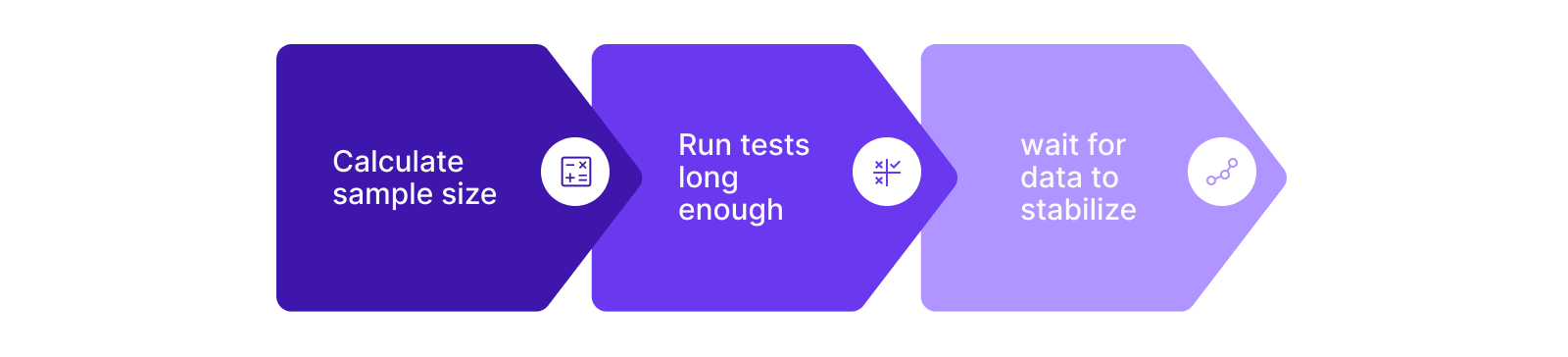

To ensure your experiment delivers statistically reliable insights:

- Select representative treatment and control markets. Instead of randomizing individual users, geo-experiments assign carefully chosen geographic regions that represent the broader market. A poor selection of markets can skew results, attributing success or failure to external factors rather than the actual marketing intervention.

Example: “Testing a new ad format in a single small city may not provide scalable insights. Selecting a diverse mix of test and control markets, accounting for population size, media consumption, and purchasing behavior, produces more reliable and actionable results.”

- Run tests long enough to capture full conversion cycles. Different channels and customer journeys operate on varying timelines. Ending an experiment too soon can lead to premature conclusions.

Example: “Subscription-based businesses may need weeks to assess retention effects, whereas e-commerce brands might see purchase responses more quickly.”

- Wait for data to stabilize before making decisions. Early fluctuations in performance can be misleading, and concluding too soon risks misreading the results. Beyond waiting for the experiment period to end, consider adding a post-treatment observation window. It captures lagged effects of the treatment that extend beyond the test phase, giving a more complete picture of long-term impact.

Example: “A geo-experiment measuring the impact of TV ads may show an initial spike in search traffic, but the full effect on conversions may take weeks to materialize. Allowing for a post-treatment observation period ensures these delayed responses are accounted for before making final budget decisions.”
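For user-level conversion lift tests, the standard two-proportion power calculation gives a rough minimum sample per group, which can then be turned into a minimum duration from expected daily traffic. The sketch below is a normal-approximation estimate under stated assumptions (two-sided test, equal group sizes, hypothetical function names and inputs). Geo experiments with only a handful of markets usually call for simulation-based power analysis on historical data instead, and any duration estimate should still be stretched to cover the full conversion cycle and a post-treatment window.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(baseline_rate: float, relative_lift: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in each of two equal groups to detect `relative_lift`.

    Normal-approximation formula for a two-sided, two-proportion test.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


def minimum_days(n_per_group: int, daily_users_per_group: int) -> int:
    """Days of traffic needed to reach the sample size (before any conversion-cycle buffer)."""
    return ceil(n_per_group / daily_users_per_group)


if __name__ == "__main__":
    # Hypothetical: 2% baseline conversion rate, aiming to detect a 20% relative lift.
    n = sample_size_per_group(0.02, 0.20)
    print(n, minimum_days(n, daily_users_per_group=1_500))
```

Halving the detectable lift roughly quadruples the required sample, which is why small expected effects need either more traffic or a longer test.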

By ensuring the right sample size and duration, brands can avoid reactive decision-making and generate insights that lead to long-term marketing success.

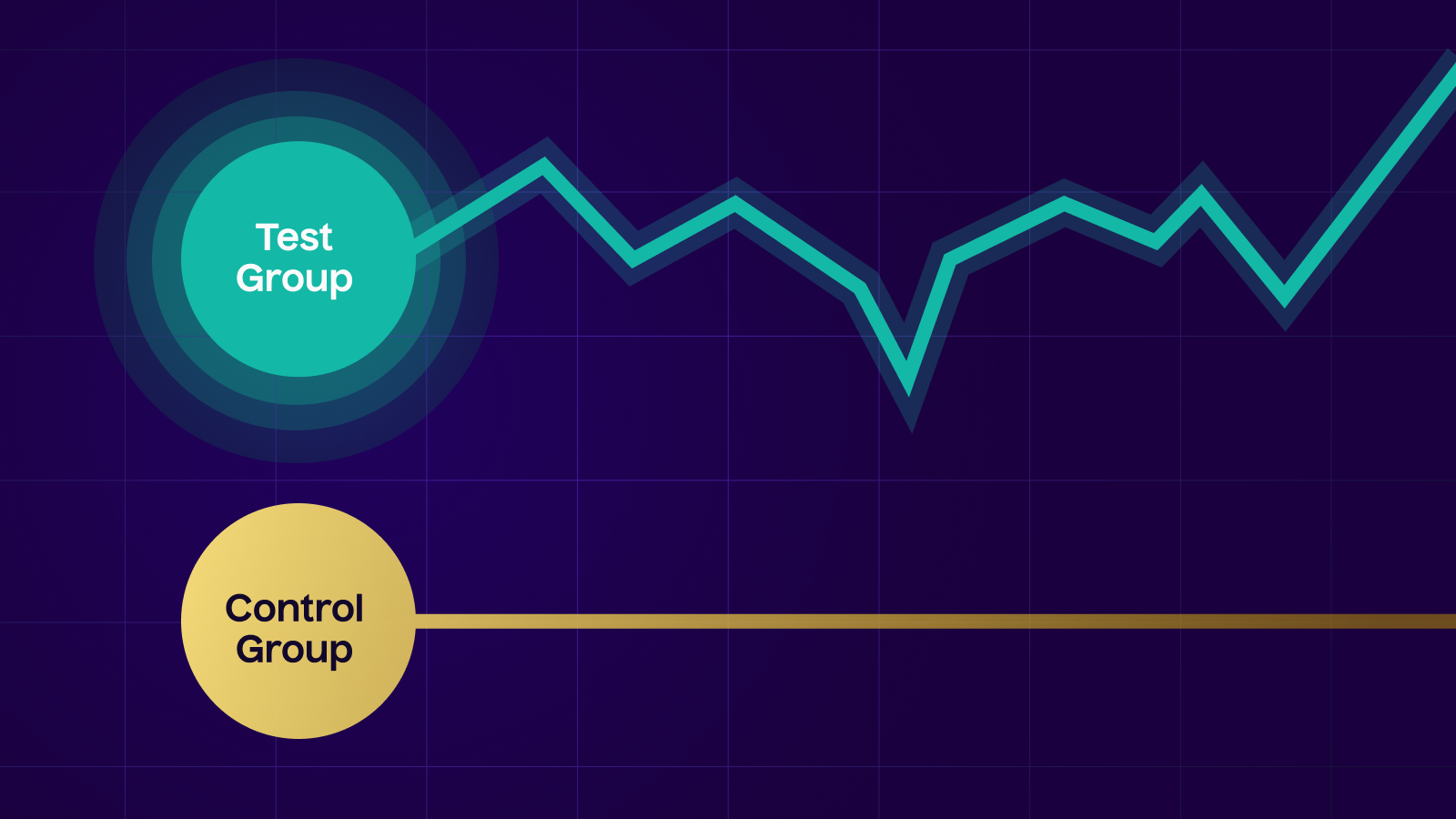

6. Set Up a Clean Holdout Group

Without a properly structured control group, it’s impossible to determine whether conversions were truly driven by advertising or if they would have happened organically. A poorly designed experiment risks overestimating the impact of media spend and misallocating budgets.

To ensure your experiment accurately measures incremental impact:

- Define a holdout group that receives no exposure to the test variable. This allows marketers to compare results between exposed and unexposed audiences to isolate the true effect of media spend.

Example: “For a Facebook campaign, a portion of the audience should be deliberately excluded from ad exposure to measure the lift in conversions.”

- Ensure the holdout group is statistically representative. Control groups should mirror the characteristics of the test audience: demographics, behavior, and purchase intent must align to avoid skewed comparisons.

Example: “If the test group consists of high-intent shoppers, but the holdout group includes casual browsers, the experiment results may be misleading.”

- Compare test and control group performance to quantify true lift. The difference in conversion rates between these groups reveals the actual impact of your campaign, separating ad-driven results from organic growth. However, not every observed difference is meaningful: random fluctuations can create the illusion of impact. Use statistical techniques such as confidence intervals, p-values, or Bayesian inference to assess whether the observed lift is a genuine effect of the campaign or just noise.

This step determines whether you can reject the null hypothesis that the campaign had no effect, so that marketing decisions rest on evidence rather than chance.

Example: “If a geo-experiment shows a 10% increase in conversions in the treatment markets compared to the control, statistical testing can confirm whether this lift is significant or if it falls within expected random variation, preventing misallocation of budget based on misleading results.”
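As an illustration of the significance check, here is a two-proportion z-test with a confidence interval for a user-level conversion lift test. The function name and numbers are hypothetical, and the normal approximation assumes reasonably large groups; geo experiments with few markets typically need time-series or permutation-based methods rather than this test.

```python
from math import sqrt
from statistics import NormalDist


def lift_test(conv_control: int, n_control: int, conv_test: int, n_test: int,
              alpha: float = 0.05) -> dict:
    """Two-sided two-proportion z-test plus a confidence interval for the lift."""
    p_c = conv_control / n_control
    p_t = conv_test / n_test
    diff = p_t - p_c

    # Under the null hypothesis of no difference, pool both groups for the standard error.
    p_pool = (conv_control + conv_test) / (n_control + n_test)
    se_null = sqrt(p_pool * (1 - p_pool) * (1 / n_control + 1 / n_test))
    z = diff / se_null
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # The confidence interval uses the unpooled standard error.
    se = sqrt(p_c * (1 - p_c) / n_control + p_t * (1 - p_t) / n_test)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    return {
        "absolute_lift": diff,
        "relative_lift": diff / p_c,
        "z": z,
        "p_value": p_value,
        "ci": (diff - z_crit * se, diff + z_crit * se),
    }


if __name__ == "__main__":
    # The same 20% relative lift, measured on two hypothetical audience sizes.
    small = lift_test(200, 10_000, 240, 10_000)
    large = lift_test(2_000, 100_000, 2_400, 100_000)
    print(round(small["p_value"], 3), round(large["p_value"], 6))
```

With 10,000 users per group, a 2.0% to 2.4% conversion lift is not significant at the 5% level (p is roughly 0.054 and the interval includes zero). With 100,000 users per group, the same relative lift gives a p-value well below 0.001.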

By setting up a clean and well-matched holdout group, brands can distinguish real media impact from noise and make smarter, data-driven budget decisions.

7. Validate Results with Multiple Experiments

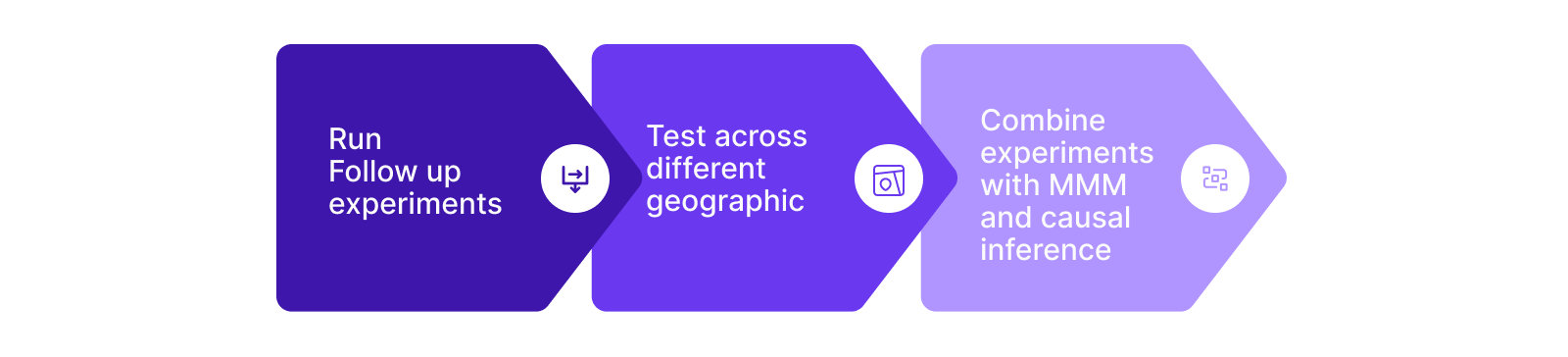

Relying on a single experiment to make high-stakes marketing decisions is risky. Consumer behavior, market conditions, and external factors can all influence results, making it essential to validate findings through multiple tests. One-off experiments may lead to misleading conclusions, causing brands to either scale ineffective strategies or abandon high-potential opportunities too soon.

To ensure reliable and repeatable insights:

- Run follow-up experiments to confirm findings. A successful test today may not yield the same results in a different market or season. Repeating experiments reduces the chances of false positives and ensures consistency in insights.

Example: “If an ad campaign drives a 20% conversion lift in Q1, retest in Q3 to check if the effect holds or was influenced by seasonal demand.”

- Test across different geographies, audience segments, and campaign types. Different regions and customer groups respond differently to marketing efforts. Expanding tests across multiple segments ensures that results are scalable and generalizable.

Example: “A discount campaign may work well for new customers but have little impact on loyal buyers. Testing separately helps refine the right offer for each segment.”

- Combine experimentation with other measurement methodologies. No single measurement approach is foolproof. Pairing incrementality experiments with MMM and causal attribution provides a comprehensive view of marketing performance.

Example: “If an experiment suggests that social ads drive a 15% sales lift, validate this by cross-referencing with MMM trends to ensure alignment.”
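When several tests measure the same effect, one common way to combine them is a fixed-effect, inverse-variance weighted average, which gives more precise tests more weight. The sketch below uses hypothetical helpers and made-up numbers, and it assumes every test estimates the same underlying lift. If effects genuinely differ by season, market, or segment, report them separately or use a random-effects model instead.

```python
from math import sqrt


def pool_estimates(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Fixed-effect inverse-variance pooling of (lift, standard_error) pairs.

    Returns the pooled lift and its standard error.
    """
    weights = [1 / se ** 2 for _, se in estimates]
    total_weight = sum(weights)
    pooled = sum(w * lift for w, (lift, _) in zip(weights, estimates)) / total_weight
    return pooled, sqrt(1 / total_weight)


def difference_z(a: tuple[float, float], b: tuple[float, float]) -> float:
    """z-score for the gap between two independent estimates (a rough consistency check)."""
    return (a[0] - b[0]) / sqrt(a[1] ** 2 + b[1] ** 2)


if __name__ == "__main__":
    q1 = (0.20, 0.05)  # hypothetical Q1 test: 20% lift, standard error 5 points
    q3 = (0.12, 0.04)  # hypothetical Q3 retest: 12% lift, standard error 4 points
    lift, se = pool_estimates([q1, q3])
    print(f"pooled lift {lift:.3f} +/- {1.96 * se:.3f}")
    print(f"Q1 vs Q3 gap z = {difference_z(q1, q3):.2f}")
```

Here the two tests differ by about 1.25 standard errors, so the gap is not clearly beyond chance, and a pooled lift of roughly 15% summarizes both runs better than either one alone.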

By continuously validating results across multiple tests, brands can move beyond isolated successes or failures and build a repeatable, scalable measurement framework for long-term growth.

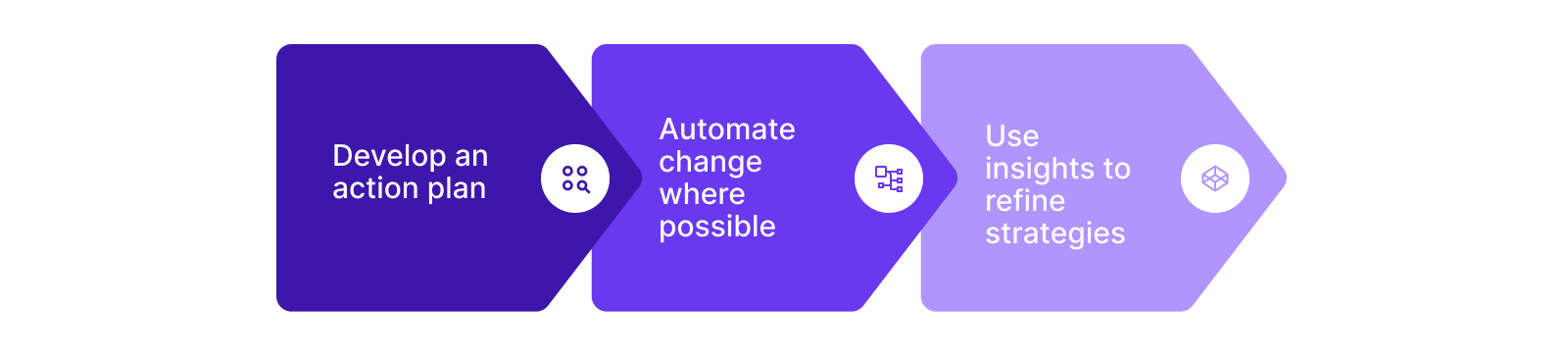

8. Translate Insights into Action

Running experiments is only half the battle; failing to act on the results renders them useless. Many brands invest in testing but struggle to translate findings into concrete marketing optimizations, leaving potential performance gains untapped. Insights should drive immediate, measurable improvements in campaign strategy, budget allocation, and execution.

To ensure experiments lead to real business impact:

- Develop a structured plan for incorporating insights into future campaigns. Instead of letting results sit in a report, create clear action steps for how learnings will be applied.

Example: “If an incrementality test reveals that many branded paid search conversions would have come through organic search anyway, shift budget away from branded paid search and reinvest in awareness campaigns.”

- Automate changes where possible. AI-driven marketing intelligence can use test results to dynamically optimize campaigns in real time, removing the manual effort of applying insights.

Example: “If an AI-powered system detects diminishing returns on a Facebook campaign based on experiment data, it can automatically reallocate spend to a higher-performing channel.”

- Use insights to refine overall marketing strategies and continuously optimize. Experiments shouldn’t be one-off projects; they should inform long-term decision-making and strategic shifts.

Example: “If A/B testing shows that higher-quality creatives drive a 15% increase in engagement, invest more in premium content production for future campaigns.”
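A rule for acting on results can be as simple as a scripted reallocation. The sketch below is purely hypothetical: it moves a fixed share of budget from channels whose measured iROAS falls below a floor to the channel with the highest iROAS. Because returns diminish as spend grows, a production system would normally use marginal returns (for example, response curves from an MMM) rather than the average iROAS used here.

```python
def reallocate(budgets: dict[str, float], iroas: dict[str, float],
               floor: float = 1.0, shift_share: float = 0.2) -> dict[str, float]:
    """Shift `shift_share` of each below-floor channel's budget to the top channel.

    Total budget is preserved; channels at or above the floor are untouched.
    """
    best = max(iroas, key=iroas.get)
    new_budgets = dict(budgets)
    for channel, value in iroas.items():
        if channel != best and value < floor:
            moved = budgets[channel] * shift_share
            new_budgets[channel] -= moved
            new_budgets[best] += moved
    return new_budgets


if __name__ == "__main__":
    budgets = {"branded_search": 50_000.0, "social": 30_000.0, "ctv": 20_000.0}
    # Hypothetical iROAS readouts from recent incrementality tests.
    iroas = {"branded_search": 0.4, "social": 1.8, "ctv": 1.1}
    print(reallocate(budgets, iroas))
```

Running a rule like this on a fixed cadence, and only on results that passed a significance check, keeps automation from chasing noise.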

Turning insights into actionable next steps ensures that experiments don’t just validate past decisions; they actively drive smarter, more effective marketing strategies in the future.

Final Thoughts

Experiments are critical to making data-backed decisions that drive real business impact. Marketers can confidently optimize their advertising investments by designing tests with clear objectives, choosing the right methodologies, ensuring accurate measurement, and continuously validating insights.

Ready to elevate your measurement strategy? Explore Lifesight’s incrementality testing solution to take your experimentation to the next level.
