Marketing is undergoing a significant transformation as traditional identifiers and user-level tracking methods are increasingly deprecated. At the same time, the marketing journey is a perpetual cycle of discovery, learning, and feedback. Even though marketing innovation accounts for over 20% of marketing budgets, many marketers still grapple with what the term actually means.

In this evolving landscape, marketing experimentation emerges as an essential strategy. It equips you to measure the impact of your campaigns and use the results to learn, iterate, and optimize.

But what counts as an “experiment” in this context? What role do experiments play within the marketing measurement toolkit?

In this blog post, we’ll explore those questions, discuss the different forms and benefits of marketing experiments, address the challenges encountered, and make predictions.

What Are Experiments in Marketing?

Experiments in marketing are structured approaches that enable teams to test and refine hypotheses iteratively within their marketing strategies. This testing can cover a range of elements, from messaging and channel selection to audience targeting and creative execution. Additionally, experiments can incorporate incrementality testing, such as geographical or split testing, to directly measure the additional impact of one variable over another.

That way, you can execute innovative campaigns and be confident of their success, as you’re informed by evidence rather than intuition alone. Conducting marketing experiments also helps save costs, as you don’t spend on things that don’t work.

Different Types of Marketing Experiments

Although the following isn’t exhaustive, here are the most common experiments performed by marketers:

1) A/B testing

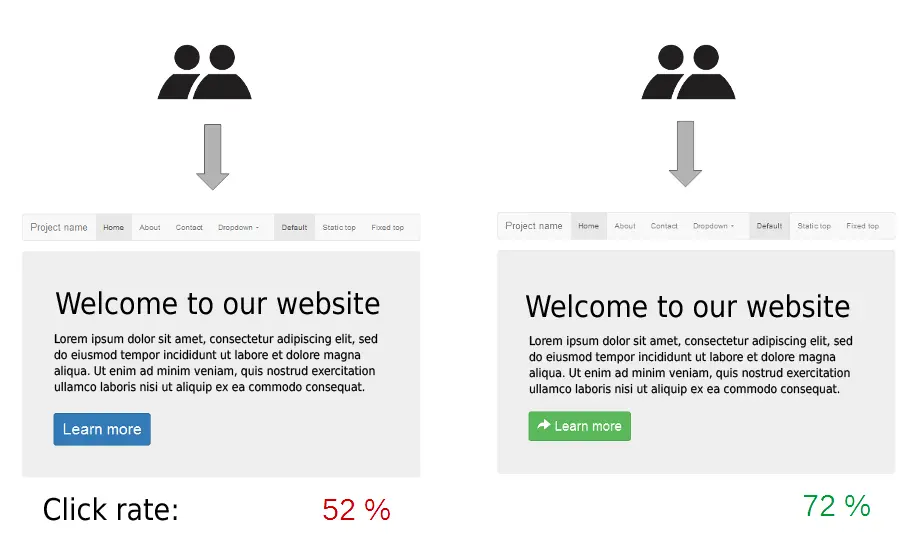

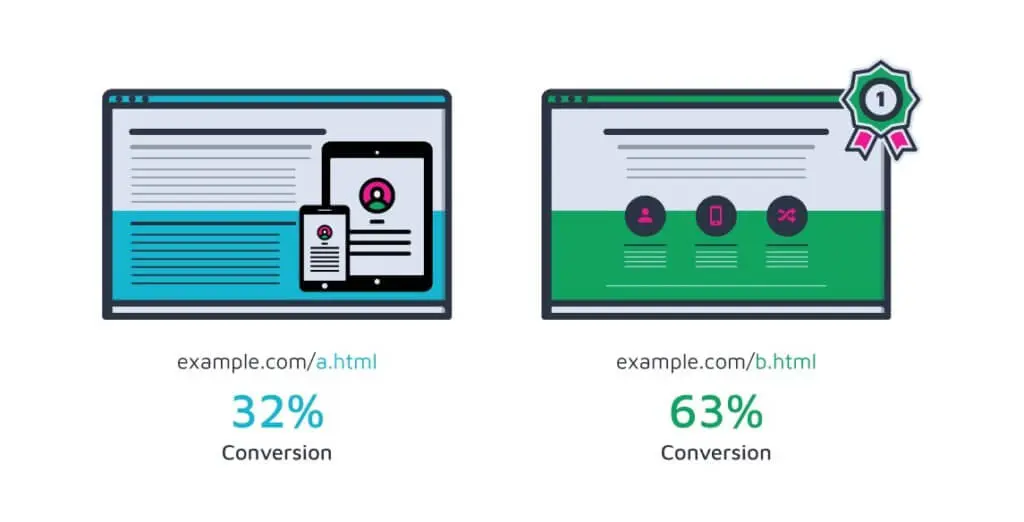

A/B testing, or split testing, is a randomized controlled experiment where you test two versions of an element to see which version performs better. It’s used in marketing, web development, and user experience (UX).

For example, different landing page layouts can be compared on websites to assess visitor behavior, such as how long they stay on the page or which buttons they click.

Other A/B testing examples include call-to-action (CTA) buttons, email subject lines, and ad placements.

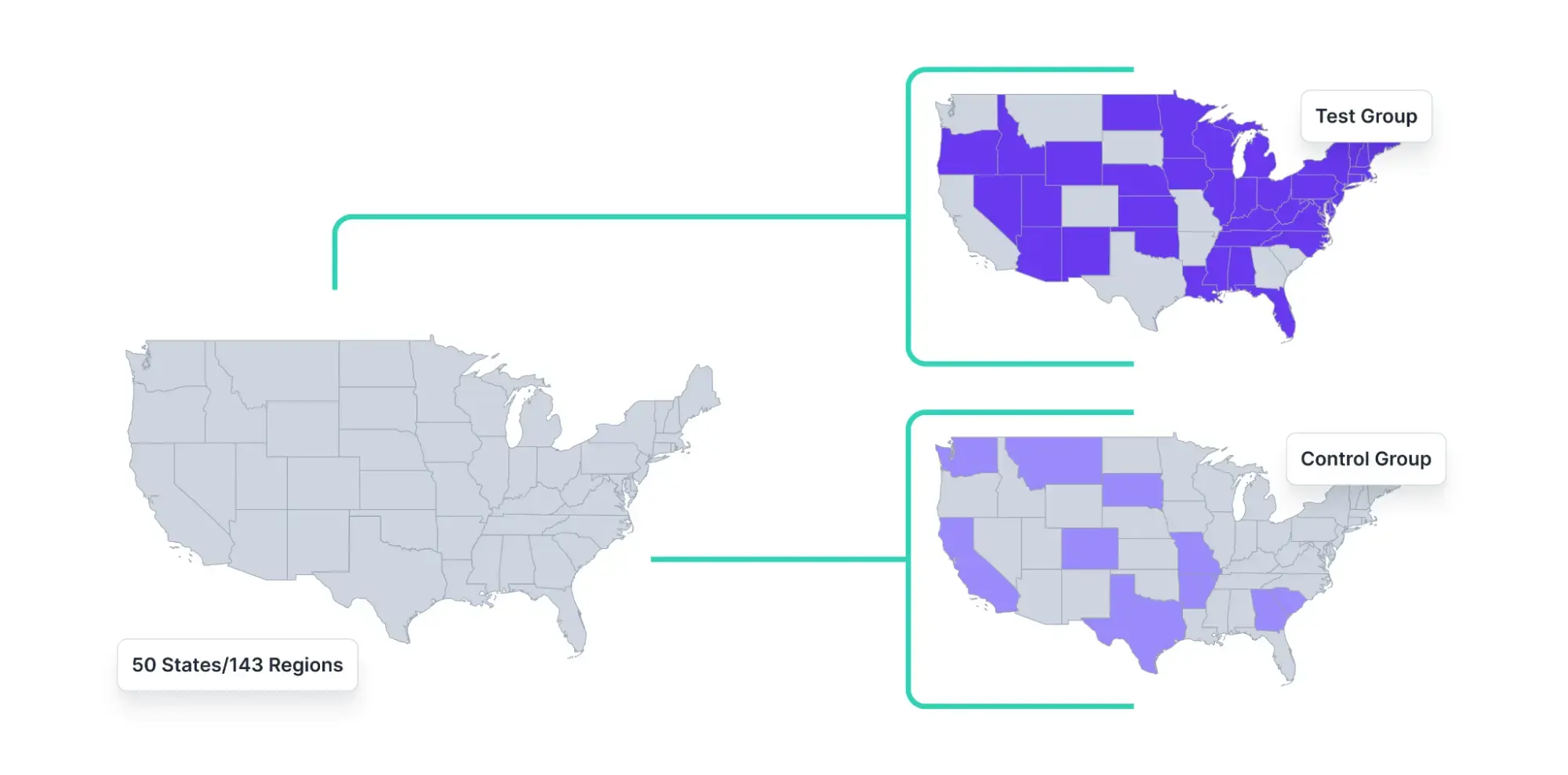

2) Geo experiments

Also known as matched market tests (MMT), geo experiments enable you to analyze the actual impact of your ad campaign within a specific geographic market.

A geo experiment typically involves increasing or decreasing your ad spend in that area or even stopping your campaign altogether. You then closely monitor the changes in sales, signups, downloads, or any other relevant KPIs within this market.
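One simple way to read a geo experiment is a difference-in-differences comparison: the change in the test market minus the change in a comparable control market over the same period. The sketch below assumes you have such a matched control market; the function name and the weekly signup figures are hypothetical and only illustrate the arithmetic.

```python
def diff_in_diff(test_before, test_after, control_before, control_after):
    """Change in the test market beyond the change seen in the control market."""
    test_change = test_after - test_before
    control_change = control_after - control_before
    return test_change - control_change


# Hypothetical weekly signups before and after pausing ads in the test market.
effect = diff_in_diff(
    test_before=1000, test_after=850,
    control_before=980, control_after=960,
)
print(effect)  # -130: signups fell by 130 more than in the control market
```

Subtracting the control market's change helps separate the effect of the spend change from seasonality or other factors that hit both markets.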

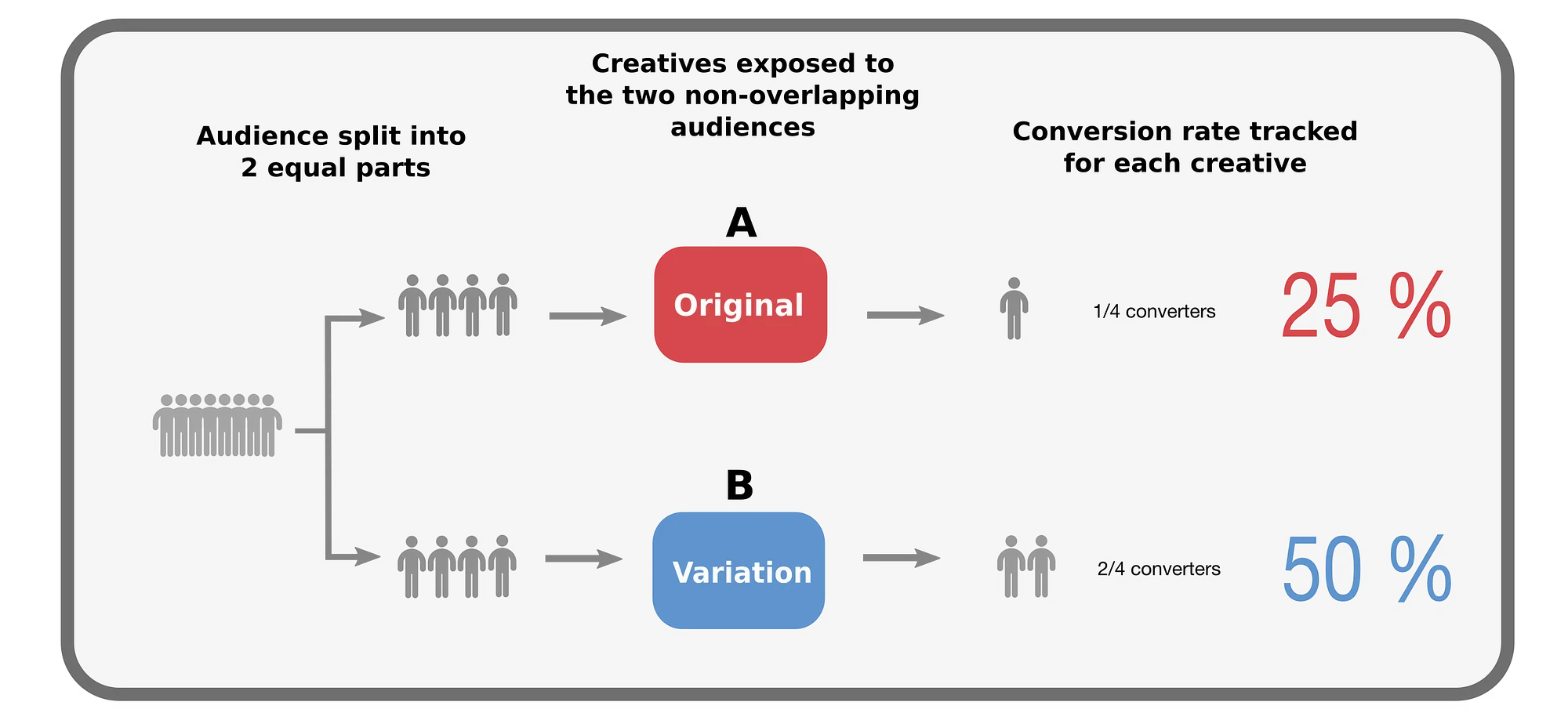

3) Audience split testing

Audience split testing is a variation of A/B testing that involves dividing your audience into segments based on:

- Psychographics: Personality traits, attitudes, and interests

- Demographics: Age, gender, and income level

- Behavior: Purchase history, brand interactions, and product usage rates

Audience split testing can compare how two segments react to an entire product, feature set, or campaign rather than different variations of the same element (like an email subject line).

For instance, it can help in product development by guiding the prioritization of diverse product features or offerings based on audience preferences.

4) Multivariate testing

This advanced testing method enables you to simultaneously test multiple variables or elements within a landing page, email, or any other marketing asset to determine which combination of changes produces the best outcome.

Unlike A/B testing, which compares the performance of two versions of a single variable, multivariate testing examines how different elements interact and the overall effect on user behavior or conversion rates.
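To see why multivariate tests need more traffic than A/B tests, it helps to count the combinations. The sketch below enumerates every combination of three page elements (a full factorial design); the element names and values are made up for illustration.

```python
from itertools import product

# Hypothetical page elements, each with two options.
headlines = ["Save time", "Save money"]
button_colors = ["blue", "red"]
hero_images = ["photo", "illustration"]

# Every combination of the three elements becomes one variant to test.
variants = [
    {"headline": h, "button_color": c, "hero_image": i}
    for h, c, i in product(headlines, button_colors, hero_images)
]

print(len(variants))  # 2 * 2 * 2 = 8 variants
```

Each extra element or option multiplies the number of variants, so the traffic available per variant shrinks quickly.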

5) Split URL testing

Also known as redirect testing, this involves testing separate URLs to see which one performs better. The testing variations include different page designs, navigational frameworks, or entirely different content approaches hosted on separate URLs.

Instead of viewing a variation of the same webpage (as seen in A/B testing), the audience is directed to one of the two unique URLs in split URL testing.

Scientific Method Applied in Marketing Experiments

Conducting a marketing experiment requires a structured approach to produce reliable results. It comprises four stages:

1) Hypothesis formulation

First, identify a specific marketing problem or opportunity. For example, if you notice a poor-performing landing page on your site, define a hypothesis suggesting a potential solution or explanation.

It could be as straightforward as “Switching the CTA button color from blue to red will boost the landing page’s conversion rate.”

2) Experimentation

Next, select an appropriate method (such as A/B testing) to test your marketing hypothesis. Define control and experimental groups and clarify the data you want to collect during the process.

Experiment by introducing different variations to respective groups; for example, some users see a blue CTA button, and others see a red one.
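A common way to split users into groups is to hash a stable user ID, so the same visitor always sees the same variant. The sketch below is one possible approach, not a prescribed method; the function name, the experiment key, and the user IDs are assumptions for illustration.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("blue", "red")) -> str:
    """Deterministically map a user to a variant using a SHA-256 hash."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)  # roughly uniform across variants
    return variants[bucket]


# The same user gets the same CTA color on every visit.
first_visit = assign_variant("user-42", "cta-color")
second_visit = assign_variant("user-42", "cta-color")
print(first_visit == second_visit)  # True
```

Including the experiment name in the hash key means a user's group in one experiment does not determine their group in the next.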

Ensure you test both landing page versions over the same period of time and that the sample sizes are large enough to achieve statistical significance.
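How large is “large enough”? A rough estimate can come from the standard sample-size formula for comparing two proportions. The sketch below assumes a two-sided test at 5% significance and 80% power; the baseline and target conversion rates are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(p_control, p_variant, alpha=0.05, power=0.8):
    """Approximate users needed per group to detect p_control vs p_variant."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    effect = p_variant - p_control
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical: detect a lift from a 10% to a 12% conversion rate.
n = sample_size_per_group(0.10, 0.12)
print(n)  # a few thousand users per group
```

Smaller expected lifts need far more users, which is worth knowing before you commit to a test duration.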

3) Observation

Gather data from the experiment, such as the number of clicks on the CTA button, session durations, bounce rates, and other key metrics.

To determine differences in outcomes, use statistical methods like t-tests, Analysis of Variance (ANOVA), or regression analysis to compare the performance of the control group with that of the experimental group.
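For conversion rates specifically, a two-proportion z-test is a common choice alongside the methods above. The sketch below uses only the Python standard library; the conversion counts are hypothetical, and the 0.05 threshold is a convention rather than a rule.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return the z statistic and two-sided p-value for B versus A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate under "no difference"
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical: blue CTA converts 400 of 4,000 users, red converts 480 of 4,000.
z, p_value = two_proportion_z_test(400, 4000, 480, 4000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("significant at 5%" if p_value < 0.05 else "not significant at 5%")
```

A small p-value suggests the difference is unlikely to be chance alone, but it says nothing about whether the lift is large enough to matter commercially.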

4) Conclusion

Analyze the data to determine if your hypothesis stands. If the red CTA button boosts conversions for the landing page, then the hypothesis is supported. Make sure to adopt this change across all relevant marketing materials.

If the hypothesis is disproven, use the insights gained to refine it or explore new hypotheses. Lastly, document the process, findings, and actions taken. This will help you learn from each experiment and inform future marketing strategies.

The Role of Experiments in the Measurement Toolkit

Insights gained from experiments are valuable both for your current campaign and for informing your future marketing initiatives. By continuously iterating and improving your strategies based on what you learn from your experiments, you can drive growth for your team.

Let’s explore the role experiments play in the measurement framework.

1) Strategic integration with traditional tools

Traditional measurement tools like multi-touch attribution (MTA) provide valuable insights into how various marketing touchpoints contribute to the customer’s decision to convert.

However, these tools often rely on historical data and predetermined algorithms, which may not fully account for the changing dynamics of consumer behavior or the interactive effects of different channels.

Therefore, experiments, particularly incrementality tests, should not exist in isolation but rather be integrated with these traditional tools. This may involve technical setup for data sharing and alignment on KPIs.

For example, to assess the true effectiveness of your paid search ad campaign, conduct an incrementality test, dividing your audience into a control group that does not see the ads and a test group that does.

If the test reveals a significant increase in conversions (e.g., sales, signups, demo trials, etc.) attributable directly to the paid search ads, far beyond what the MTA model initially indicated, it means the model underestimated the impact of paid search on driving conversions.
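The comparison between the incrementality test and the MTA model comes down to simple arithmetic. The sketch below assumes you know how many users and conversions each group had; the group sizes, conversion counts, and the MTA-credited figure are all hypothetical.

```python
def incrementality(test_conversions, test_size, control_conversions, control_size):
    """Return incremental conversions in the test group and relative lift."""
    test_rate = test_conversions / test_size
    control_rate = control_conversions / control_size
    incremental = (test_rate - control_rate) * test_size
    lift = (test_rate - control_rate) / control_rate
    return incremental, lift


# Hypothetical: 10,000 users per group; 600 conversions with ads, 500 without.
incremental, lift = incrementality(600, 10_000, 500, 10_000)
mta_credited = 60  # hypothetical conversions the MTA model credited to paid search

print(f"incremental conversions: {incremental:.0f}, lift: {lift:.0%}")
print("MTA underestimates paid search" if incremental > mta_credited
      else "MTA does not underestimate paid search")
```

In practice you would also check that the lift is statistically significant before using it to adjust the model.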

[LinkedIn post by Pan Wu on incrementality]

Therefore, you can use this opportunity to integrate more granular data and refine the parameters within your MTA model to capture customer behavior driven by ads.

You could incorporate additional touchpoints like video views, chatbot interactions, and social media interactions, adjust the attribution weight given to various marketing channels, or employ more sophisticated analytical techniques to understand the customer journey.

Alternatively, use this checklist to boost ROI with incrementality testing.

2) Validation of analytics data

Analytics and measurement platforms provide a wealth of data, but without validation, there’s always a risk of making decisions based on inaccurate or incomplete information.

Controlled experiments allow you to test, validate, and refine your hypotheses in a real-world setting. You can directly measure the effect of specific marketing activities, ensuring the data driving your decisions is accurate and actionable.

For example, if analytics suggest a high conversion rate from email marketing, an A/B test could validate this effect.

Create two versions of the email: one (Version A) remains unchanged as the control, and the other (Version B) has slight modifications in the CTA placement or offer.

After sending these versions to similar audience segments, compare the results. If Version A (the original high-performing email) converts at a rate consistent with what analytics reported, the analytics insight is validated.

3) Calibration of Marketing Mix Models (MMMs)

MMMs, by design, analyze historical data to deduce how different marketing variables contribute to sales volume over time.

However, without a clear understanding of causality, particularly whether a marketing activity genuinely drives sales or merely correlates with them, MMMs can misinterpret the effectiveness of different channels.

Incrementality tests, which involve controlled experiments like A/B testing, fill this gap by precisely isolating the effect of a single variable (e.g., an ad campaign) on outcomes. They do this by comparing a treated group (exposed to the campaign) against a control group (not exposed), providing reliable insights.

Integrating incrementality testing into MMM calibration involves employing the results from these tests to adjust the coefficients or weights assigned to marketing channels within the model.

Suppose an experiment shows that social media ads boost sales more than the model estimated. In such a case, this insight can be used to adjust the MMM, increasing the weight or influence attributed to social media ads in the model.
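As a deliberately simplified illustration, calibration can be pictured as scaling a channel's coefficient by the ratio of experiment-measured to model-estimated incremental sales. Real MMM calibration is usually more involved, so treat the function and the numbers below as assumptions, not as how any particular tool works.

```python
def calibrate_coefficient(model_coefficient, model_incremental_sales,
                          experiment_incremental_sales):
    """Scale a channel coefficient so the model matches the experiment's lift."""
    factor = experiment_incremental_sales / model_incremental_sales
    return model_coefficient * factor


# Hypothetical: the MMM credits social ads with 1,000 incremental sales,
# but the incrementality test measured 1,500 over the same period.
calibrated = calibrate_coefficient(
    model_coefficient=0.8,
    model_incremental_sales=1000,
    experiment_incremental_sales=1500,
)
print(round(calibrated, 2))  # 1.2
```

A factor above 1 raises the channel's weight, and a factor below 1 lowers it when the model was overcrediting the channel.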

This recalibration ensures the model more accurately reflects the actual effectiveness of each marketing channel in driving sales, leading to smarter, more effective budget allocation and strategy development. This process holds the promise of significantly improving your marketing outcomes.

Benefits of Running Marketing Experiments

Experimentation is important for the following reasons:

1) Objective assessment of marketing strategies and tactics

Running controlled experiments helps you base your marketing decisions on what truly works for your specific audience and market context rather than on assumptions or industry norms.

This objective approach also helps you make rational decisions that aren’t overly influenced by your personal preferences or anecdotal evidence.

2) Enhanced understanding of customer behavior and preferences

Marketing experiments offer a direct window into the customer’s world, revealing how they react to different messages, offers, and experiences. This insight is invaluable for tailoring marketing efforts to meet customer needs effectively.

By understanding which aspects of a campaign resonate with the audience, you can fine-tune your messaging, design, and delivery channels to enhance engagement and conversion rates.

3) Optimization of marketing spend by focusing on strategies that deliver measurable outcomes

Trends are fleeting in this day and age, and what worked yesterday might not work tomorrow. Experiments help you stay in tune with the changes and allocate your budget toward campaigns and channels that have proven their worth in terms of engagement, conversions, and sales.

This level of optimization maximizes the impact of the marketing spend and minimizes waste, ensuring that every dollar contributes to the bottom line.

Common Challenges in Setting up and Running Effective Marketing Experiments

The following three factors can adversely affect the success of your experiments:

1) Not keeping bias at bay

Biases can skew the results of a marketing experiment. In survey design, relying solely on multiple-choice questions may limit responses and reflect the surveyor’s expectations rather than genuine customer feedback.

To counteract this, include open-ended questions that allow for spontaneous insights. Additionally, diverse sampling methods and blind testing should be considered to reduce bias further and ensure more credible outcomes.

2) Not defining a clear hypothesis

A clear, measurable hypothesis is crucial for predicting the outcome of marketing experiments. It should detail the anticipated effect on a specific metric rather than being a general statement.

For instance, rather than saying, “Feature X will improve user experience,” specify, “Adding a CTA to our tax season landing page will increase signups by 60%.”

Ensure your hypothesis is an objective statement and not a question. That way, it clearly outlines the expected result and how it will be measured.

Carlos Flavia, Professor of Marketing at the University of Zaragoza, emphasizes proving a significant link between two variables:

[LinkedIn post by Carlos Flavia on a significant relation between two variables]

3) Relying only on a single experiment result

A common and significant error in marketing research is basing conclusions on a single experiment. This approach risks drawing inaccurate conclusions due to insufficient evidence. To identify genuine trends and eliminate outliers:

- Conduct your experiments multiple times

- Spread these repetitions over a period of time

- Ensure your results have enough data to be considered statistically reliable

Consider this: if an outcome occurs once, it might be a fluke. If it happens twice, perhaps it’s a coincidence. But three times? That suggests a trend. Repetitive testing helps observe consistent patterns and trends, leading to more accurate conclusions.

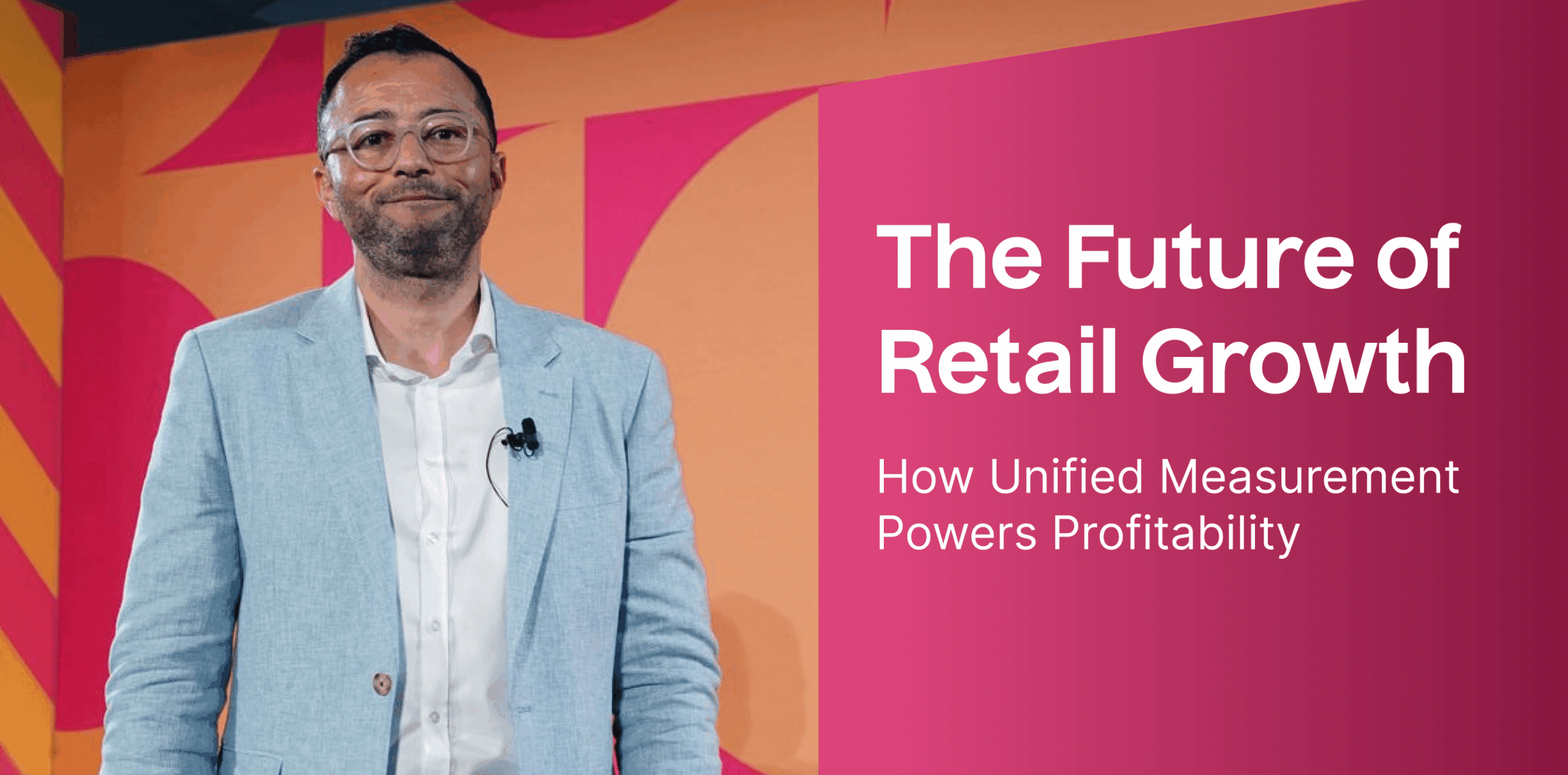

The Future of Marketing Experiments

- AI-driven hypothesis generation: Since AI algorithms can sift through vast consumer datasets, they can uncover specific patterns, trends, and anomalies that human analysts might overlook. This information helps formulate more targeted hypotheses for marketing experiments tailored to individual or segment-specific needs and desires.

- Automated experiment platforms: These can handle many experiments simultaneously, automating repetitive tasks, such as sending surveys, collecting responses, and analyzing data. They also enable the testing of varied hypotheses across different segments and channels without overwhelming the team.

- Ethical AI and consumer privacy: You must prioritize transparency with consumers about how their data is used and ensure that consent is obtained in straightforward and understandable terms. Implement robust data security measures to protect consumer information from breaches and misuse. When using AI technologies, ensure that algorithms do not perpetuate biases or inequalities.

Marketing Works Best When Led by Evidence

The critical function of experiments within a broad-based marketing measurement toolkit cannot be overstated. These tests serve as the cornerstone for marketers aiming to verify, fine-tune, and improve their strategies.

To benefit firsthand from the transformative impact of data-driven decision-making on your marketing outcomes, book a demo with Lifesight, a unified marketing measurement platform.

Discover how we can empower your marketing experiments (and strategies along the way) with precision and scalability, driving unprecedented growth for your business.
